At Electric Well.

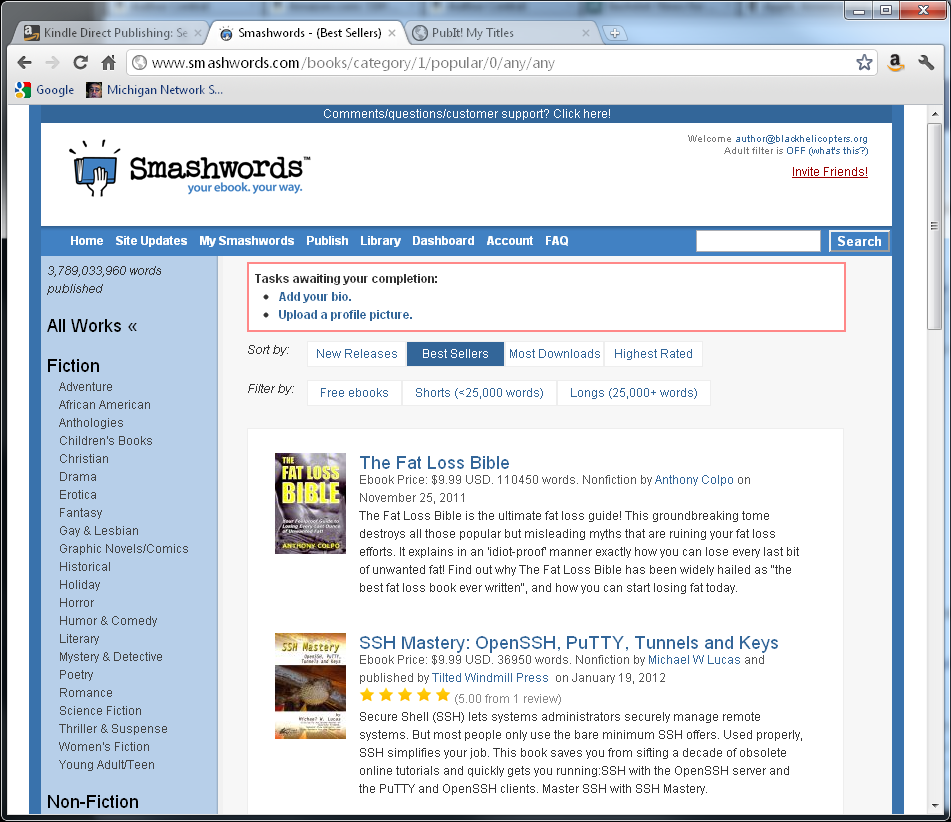

SSH Mastery now #2 best-seller on Smashwords

Smashwords is an ebook retailer that sells books in ten different electronic formats in one purchase. If you want a book in .pdb for your Palm Pilot, in PDF for your laptop, and epub for your Nook, that’s where you go.

I just saw this in their best-seller list.

I’m at #2 site-wide, right behind the Fat Loss Bible.

The implications are obvious: I must write a book for fat techies. I’ll make a fortune!

SSH Mastery Review from Peter Hansteen

Peter has already read and reviewed SSH Mastery. While a few of my readers have been kind enough to post reviews on Amazon and Smashwords (which I very deeply appreciate), Peter’s is the first long review.

And here I should confess something: The very existence of SSH Mastery is Peter’s fault.

Peter will be doing the tech review of Absolute OpenBSD 2nd Edition. He looked over the outline and said “You need more SSH in here. You need SSH here, and here. More SSH love!” So, I listened to him. The SSH content overflowed the OpenBSD book from a planned 350K words to closer to 400K. I can’t comfortably read a 400K-word book. So, something had to give. And it was SSH.

And to again answer what people keep emailing me and asking: yes, a print version is coming. Yes, I am writing AO2e. When I have dates, I will announce them.

SSH Mastery Round-Up

I went to bed last night, satisfied that I had gotten SSH Mastery uploaded to the various ebook sites. I figured that I’d contact some people about doing reviews this weekend, maybe generate one or two sales. I awoke to discover that ten copies had sold while I slept. I had also received a whole bunch of messages via Twitter, Facebook, and email. Rather than try to answer them all individually, I decided to answer here.

If you’ve bought the book: thank you! Please consider leaving a review on your ebook site and/or Amazon; it would seriously help me out.

SSH Mastery is currently available via Smashwords, Kindle, and Nook. The Nook version seems to be missing its cover; I’ll take that up with B&N once I post this.

Want it in your preferred format? Permit me to direct you to Smashwords. Buy the book once, get it in any or all of ten different formats, from epub to PDF to old formats like PalmDoc and LRF. It doesn’t sync to your device, but you can read it anywhere, and it’s stored “In The Cloud (ooooh!)”. There is no DRM on any version where I control DRM. SSH Mastery is only $9.99. If someone goes to the trouble to illicitly download a tightly-focused, task-specific tech book that’s less expensive than lunch, well, they suck. Please tell them that.

Once Smashwords finishes digesting the book, they will feed it to iBooks, Kobo, and all the other online retailers. I have no insight into how long this will take. If you sight SSH Mastery on iBooks or Kobo, please let me know! Actually, I’m shocked that Smashwords was able to process the highly-formatted original document. Their Meatgrinder only takes Microsoft Word files, and my file was full of headers and in-document hyperlinks and text styles and images. It’s obviously much improved over the early days. Following their instructions works. Amazing, that.

There will be a print version. The print layout person works from the same files I feed to the ebookstores. The print will take time. She will lay out a chapter for me, so that I can approve a rough design. She will then lay out the entire book. That will give us a page count and let me do the index. We’ll proof that a few times, to catch any errors, and then kick it out to the printer. But I didn’t want to delay the ebook until the print was ready.

The page count is critical. Page count dictates the price. I’m 90% confident of the price, but I can’t announce it until I know. Once I have the price, we can start taking pre-orders. Now, I don’t have the infrastructure to take pre-orders. Any number of third-party companies would hold your money in escrow until I delivered the books to them. That would take a whole bunch of legal agreements, and frankly, I’m too dang lazy to be bothered.

Especially when the OpenSSH/OpenBSD folks already have that infrastructure, and they have an existing trust relationship with the community. I plan to let them have the books at my cost plus expenses (shipping and CreateSpace fulfillment costs, not sunk costs), to funnel some money into OpenSSH. CreateSpace is doing the printing, so I don’t think I can offer an exclusivity window — once I order a crate of books, Amazon will list and ship to their direct customers. But I will ship those books at the earliest opportunity.

I’m also looking for a solution to let me sell print/ebook combinations. That’s how I like my books, after all. If I can work out a cost-effective solution that doesn’t involve me hand-mailing books, I’ll do it.

But you want the book now. You really do. Mind you, I know all of my readers are good people. You don’t use passwords with SSH. You tightly secured all of your SSH servers. You know when and how to forward ports, and X11, and when to use an SSH VPN. But you know people who need this book. You know people who think that SSH-ing in as root with a password is a good idea. Make them buy the book. For their own good.

SSH Mastery available at Smashwords

To my surprise, SSH Mastery is available at Smashwords.

I don’t know if this version will make it through to Kobo and iBooks, but you can buy it now. If I have to update it to get the book through the Smashwords Meatgrinder and into third-party stores, you’d get access to those later versions as well.

SSH Mastery ebook uploaded to Amazon and B&N

I just finished uploading the ebook versions of SSH Mastery to Amazon and Barnes & Noble. The manuscript is en route to the print layout person.

Amazon should have the book available in 24 hours or so, Barnes & Noble in 24-72 hours. Once they’re available, I’ll be able to inspect the ebooks to check for really egregious errors. The files were clean when I uploaded them, but both companies perform their own manipulation on what I feed them. There’s no way to be sure the books come out okay until I can see the final product.

What about, say, iBooks? Kobo? The short answer is: they’re coming. The long answer is: those sites are fed via Smashwords. Smashwords only accepts Microsoft Word files, and they have very strict controls on how books can be formatted. Their ebook processor, Meatgrinder, isn’t exactly friendly to highly-formatted books. I must spend some quality quantity time getting the book into Smashwords.

I’ll post again when the books are available on each site. In the meantime, I’m going to go put my feet up.

Consistency in Writing

For the last couple of weeks, the SSH Mastery copyeditor has said “There’s something wrong with Chapter 13, but I can’t figure out what it is.” I told her that I had confidence in her ability to figure it out and to just do her best. (I wasn’t actually confident, but telling her that would have guaranteed that she would not have found it.) The copyedits came back this weekend, along with a table.

New fiction collection: “Vicious Redemption”

My first collection of short fiction, Vicious Redemption: Five Horror Stories, has started to appear in online bookstores. So far it’s available at Amazon, Barnes & Noble, and Smashwords.

Today it’s ebook only. I have a few marketing things to finalize before it goes to print.

Within a couple weeks, it should appear in other online bookstores. Ebook distribution is faster than physical distribution, but still slower than you’d think. I expect it to be in Kobo & Apple by the end of the month.

Would you enjoy these stories? The first story from the collection, Wednesday’s Seagulls, is posted on my personal web site. Go read it and find out.

I’m giving out review copies to my regular readers. If you normally read this sort of thing, and if you’re willing to read it and post a review on Amazon (as well as anywhere else you’d like), drop me an email.

Enable DNSSec resolution on BIND 9.8.1

With BIND 9.8, enabling DNSSec resolution and verification is now so simple and low-impact there’s absolutely no reason to not do it. Ignore the complicated tutorials filling the Internet. DNSSec is very easy on recursive servers.

DNS is the weak link in Internet security. Someone who can forge DNS entries in your server can use that to leverage his way further into your systems. DNSSec (mostly) solves this problem. Deploying DNSSec on your own domains is still fairly complicated, but telling a BIND DNS server to check for the presence of DNSSec is now simple.

In BIND 9.8.1 and newer (included with FreeBSD 9 and available for dang near everything else), add the following entries to your named.conf file.

    options {
        ...
        dnssec-enable yes;
        dnssec-validation auto;
        ...
    };

This configuration uses the predefined trust anchor for the root zone, which is what most of us should use.

Restart named. You’re done. If a domain is protected with DNSSec, your DNS server will reject forged entries.

To test everything at once, configure your desktop to use your newly DNSSec-aware resolver and browse to http://test.dnssec-or-not.org/. This gives you a simple yes or no answer. Verified DNSSec is indicated in dig(1) output by the presence of the ad (authenticated data) flag.
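If you'd rather script the `ad` check than eyeball dig(1) output, here is a minimal Python sketch, assuming Python 3.9+ and a resolver reachable over UDP port 53. The function names (`build_query`, `parse_header`, `check_dnssec`) are hypothetical, not part of any library. It sends one query with the EDNS0 DO bit set, then reads the AD flag and the RCODE from the response header. It performs no validation itself; it only reports what your resolver claims, and it does not retry over TCP if the answer is truncated.

```python
import random
import socket
import struct
from typing import Optional

# DNS header flag bits (RFC 1035 header layout; AD bit per RFC 4035)
FLAG_QR = 0x8000         # set in responses
FLAG_RD = 0x0100         # recursion desired
FLAG_AD = 0x0020         # authenticated data: the resolver validated the answer
RCODE_MASK = 0x000F
RCODE_SERVFAIL = 2       # a validating BIND answers bogus data with SERVFAIL


def build_query(name: str, qtype: int = 1, query_id: Optional[int] = None) -> bytes:
    """Build a recursive query for `name` with an EDNS0 OPT record whose DO bit is set."""
    if query_id is None:
        query_id = random.randint(0, 0xFFFF)
    # ID, flags, QDCOUNT=1, ANCOUNT=0, NSCOUNT=0, ARCOUNT=1 (the OPT record)
    header = struct.pack("!HHHHHH", query_id, FLAG_RD, 1, 0, 0, 1)
    labels = [label for label in name.rstrip(".").split(".") if label]
    qname = b"".join(bytes([len(l)]) + l.encode("ascii") for l in labels) + b"\x00"
    question = qname + struct.pack("!HH", qtype, 1)  # QTYPE, QCLASS=IN
    # OPT pseudo-RR (RFC 6891): root owner name, TYPE=41, CLASS=UDP payload size,
    # TTL = extended RCODE(8) | version(8) | DO(1) + Z(15), RDLENGTH=0
    opt = b"\x00" + struct.pack("!HHIH", 41, 4096, 0x00008000, 0)
    return header + question + opt


def parse_header(response: bytes) -> tuple[int, bool, int]:
    """Return (query ID, AD flag set?, RCODE) from a DNS response header."""
    if len(response) < 12:
        raise ValueError("response shorter than a DNS header")
    query_id, flags = struct.unpack("!HH", response[:4])
    if not flags & FLAG_QR:
        raise ValueError("not a DNS response")
    return query_id, bool(flags & FLAG_AD), flags & RCODE_MASK


def check_dnssec(name: str, resolver: str, timeout: float = 3.0) -> str:
    """Ask `resolver` about `name` and summarize what the header says."""
    query_id = random.randint(0, 0xFFFF)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_query(name, query_id=query_id), (resolver, 53))
        response, _ = sock.recvfrom(4096)
    resp_id, ad, rcode = parse_header(response)
    if resp_id != query_id:
        raise ValueError("response ID does not match query ID")
    if rcode == RCODE_SERVFAIL:
        return "SERVFAIL (possibly a validation failure, possibly another error)"
    return "validated (ad flag set)" if ad else "answered, but not validated"
```

To try it, call something like `check_dnssec("isc.org", "192.0.2.53")`, replacing 192.0.2.53 (a documentation address used here only as a placeholder) with the IP of your DNSSec-aware resolver. A signed domain should come back with the ad flag set; an unsigned one is answered without it.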

For the new year, add two lines to your named.conf today. Get all the DNSSec protection you can. Later, I’ll discuss adding DNSSec to authoritative domains.

SSH Mastery Cover Rough

Here’s the cover rough for SSH Mastery, by my pet artist, the highly talented Bradley K McDevitt. If you see anything wrong with it, please say so now.